With the release of ChatGPT (built on GPT-3.5) a few months ago, businesses large and small have jumped on the bandwagon to experiment with AI functionality in their operations. The software providers have not been sitting still either. With ChatGPT integrated into various Zoho products (Writer, Social, Desk, SalesIQ, CRM, and Analytics to start with), the functionality has been opened up to small business users.

But is it a good thing?

When practice makes perfect...

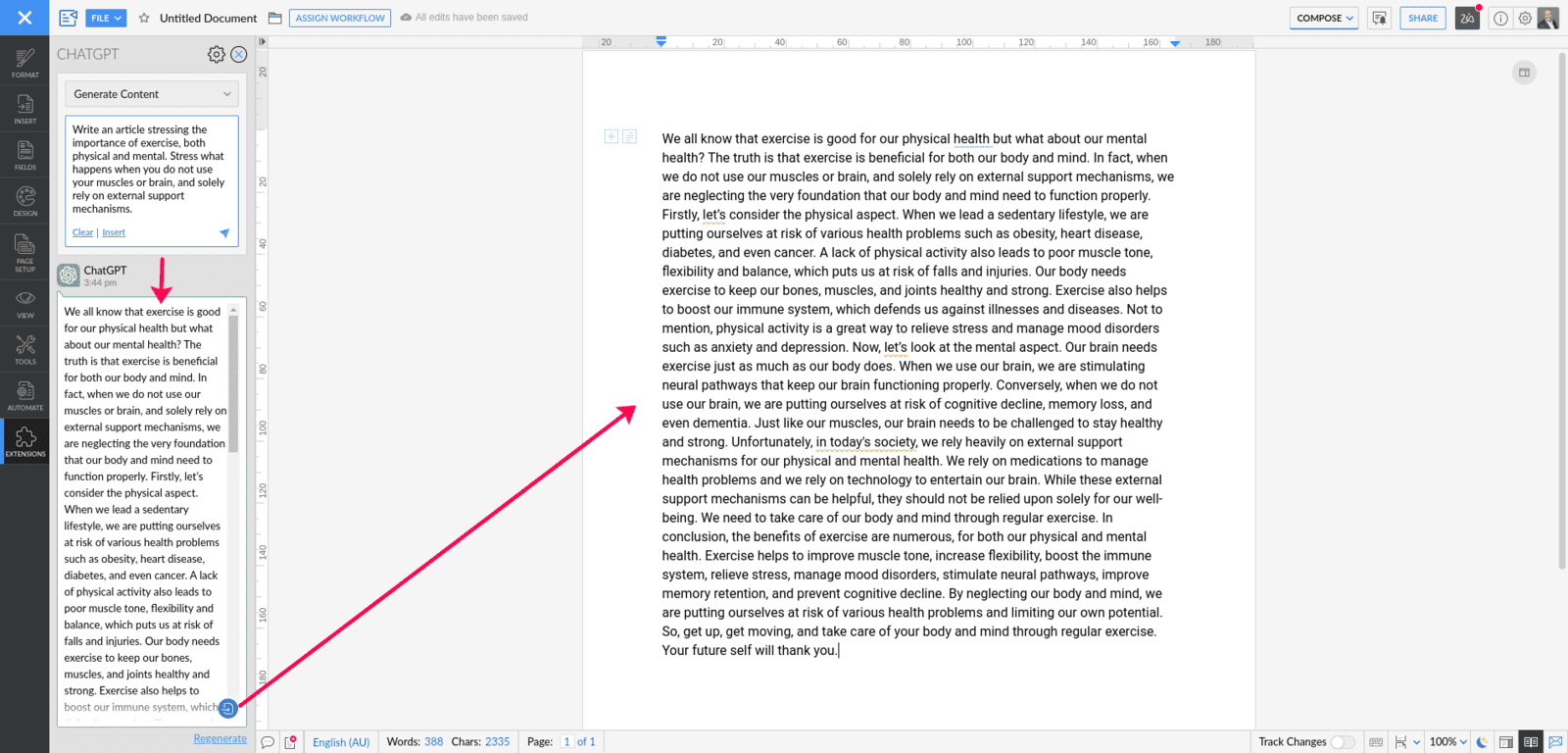

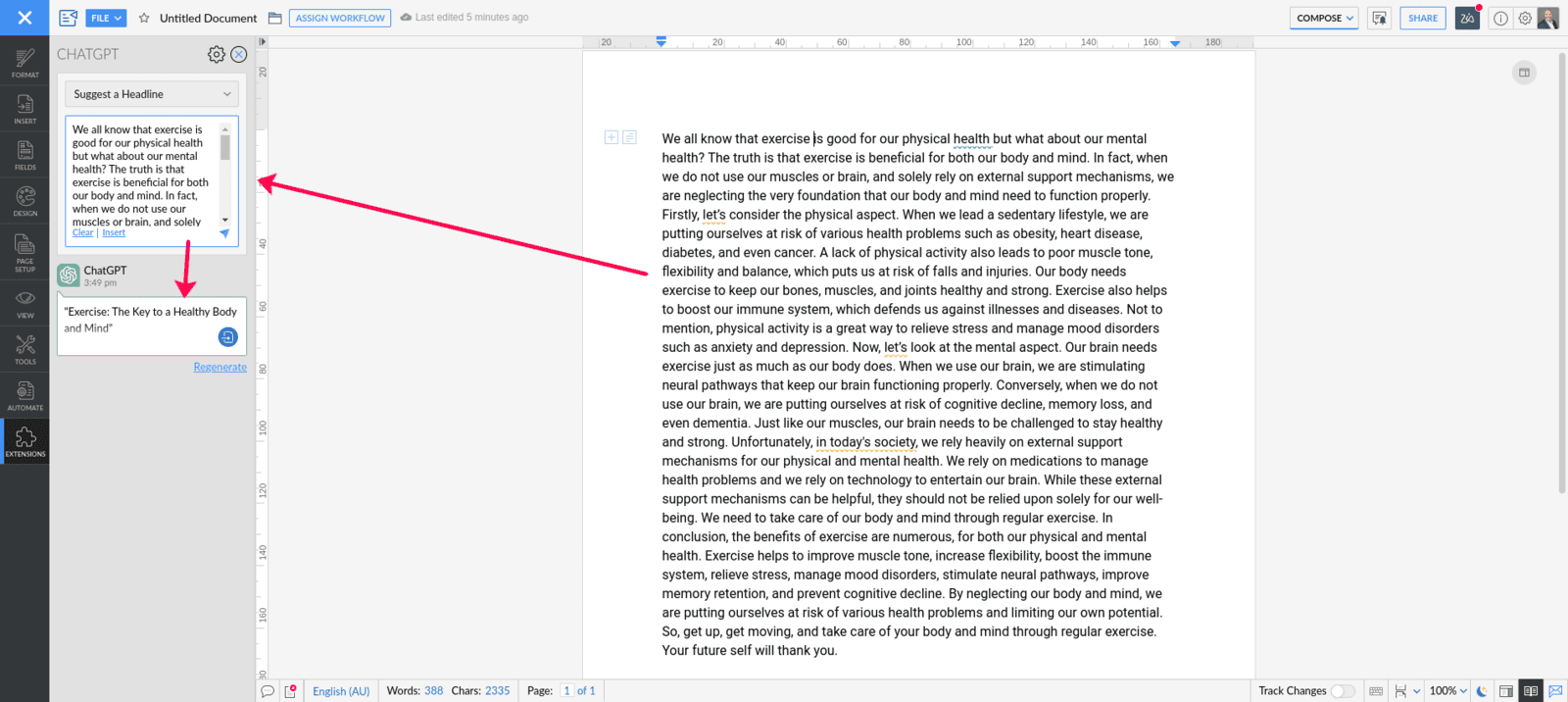

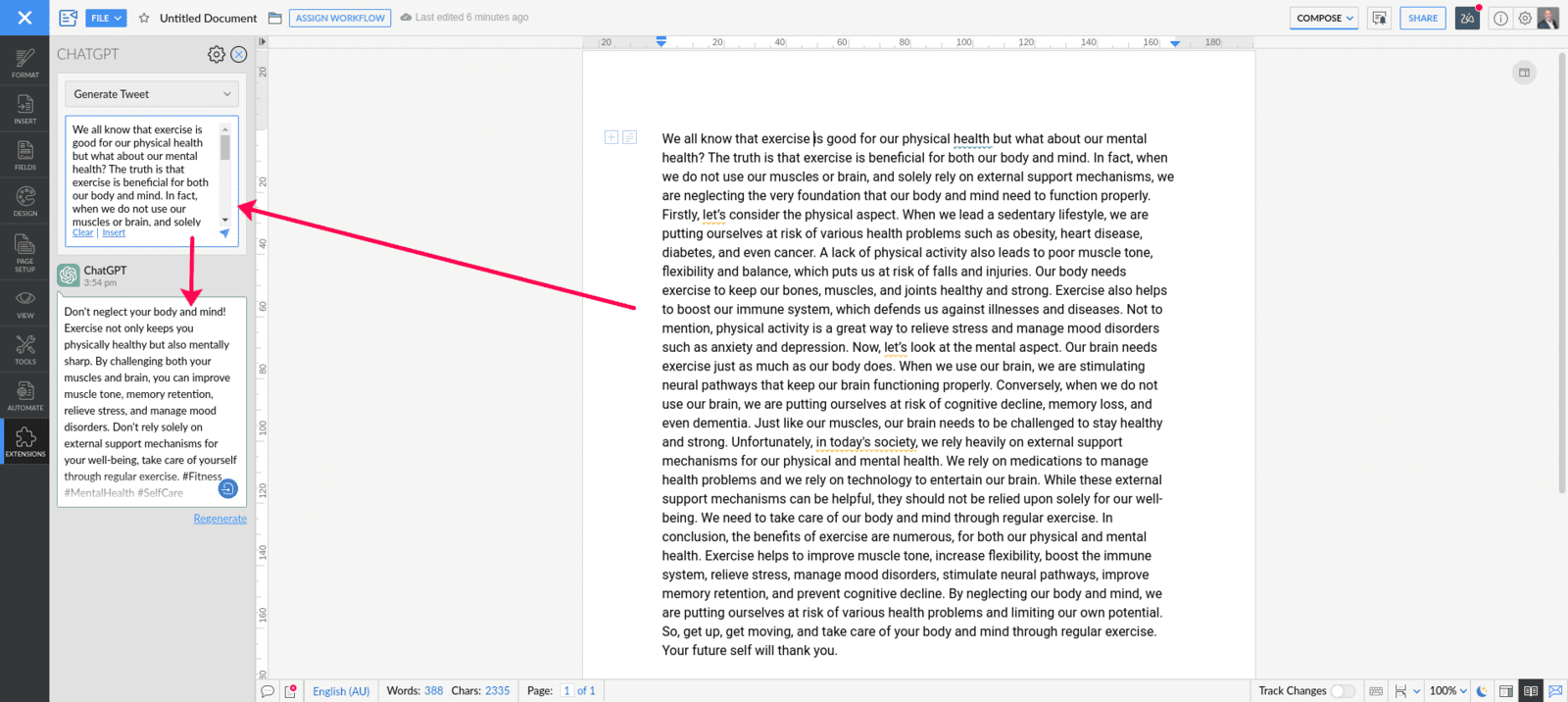

One of the extensions Zoho provides for ChatGPT can generate an article from a simple prompt. I could have written this article with ChatGPT by providing a few sentences in the prompt. This would certainly save me a significant amount of time. I would just have to read and correct the article!

Eventually, after a few articles, I would just stop reading them. Blogs would be posted without my input. I would not have spent a minute collating thoughts, committing them to text, and, where needed, rephrasing the text.

Skills unused are skills deteriorating. How long would it take before I am unable to generate content without AI?

In the accompanying images, you see Zoho Writer use ChatGPT to generate an article, suggest a headline, and draft a Tweet to promote the article.

Outsourced or not, YOU are still responsible

The above example is a simple article - innocuous enough. But who knows what the AI concocts from the data it has access to? After all, computers are incredibly literal machines - give them a goal, and they'll try to reach it, consequences be damned. What if you let AI make your financial decisions, or predict the next "BIG THING" in your industry? This is all well and good, but REALLY? Let's unpack this a bit further.

An edge in financial results depends on the quality of your data, the quality of your interpretation of that data, the time it takes to execute your decision, and not a small amount of luck. Do you trust the AI to have the highest quality of data? And if so, would the AI make the same decision over and over again if it had the same data (assuming that decision did indeed lead to the optimum outcome)? If so, then timing is the main remaining factor in steering the outcome - and since you, as the human in the chain, have only limited control over timing, timing becomes a substitute for "luck", either good or bad.

Would you let AI predict the next "BIG THING" in your industry? Assuming your competitors have the same access to data and the same access to the AI companion - would they not get the same prediction for the next "BIG THING"? Or is the AI just guessing? In which case one could argue - is it really "better" than human ingenuity, or just more "convenient"?

From failure to innovation

Notable innovations are often born from a significant failure. The ubiquitous "Post-It" note from 3M would never have seen the light of day if it weren't for a monumental failure to create a new "super-glue" ("Post-It" notes can be used for many things, but holding two objects together under stress is not one of them). Human ingenuity is one of the many aspects of consciousness we study, but have yet to truly understand.

Do you blindly trust something you do not understand?

There are three variables that require your trust when it comes to AI. Do you trust those that "control it", do you trust the process it follows, and finally, do you trust the data set it uses?

OpenAI, the company behind ChatGPT, recently received a significant investment from Microsoft. So much so, that Microsoft probably has a controlling stake in the company. Microsoft is not exactly synonymous with "Trustworthy Business", judging from past behaviour. While it is a free market, and anti-competitive behaviour can be expected (within the boundaries of the applicable laws), Microsoft has also made the transformation from a software company to a data company. Do you trust what OpenAI and, by proxy, Microsoft do with your data? Read the terms and conditions carefully - paying particular attention to the things that are NOT stated.

As for the process, even the AI experts are in the dark. For example, in a recent interview on 60 Minutes, Sundar Pichai raised concerns that AI hallucinations are not fully understood (i.e. the AI "makes up" something from nothing, and presents it as factual). Sam Altman (OpenAI) stated that if the AI were able to destroy the world - they would probably "slow it down". With these two observations in mind, would you trust the "black box" with your business or life decisions?

Access to data is the third element of a trustworthy outcome. ChatGPT is purposefully restricted to only include "Safe" data. The question is: who determines what constitutes "Safe" data? One would have thought that data is data. These are facts that, given the current observations, cannot be disputed. At the time of Copernicus, a heliocentric view of the world was considered heresy, therefore the data points leading to that conclusion were what we now would consider "unsafe" data. The same goes for the data relating to the 2020 US election, the efficacy of the Covid-19 "Vaccines", and the correlations between these and the excess mortality. The data does not change - but you are not supposed to look at it. I don't know about you, but "not supposed to" sounds like an invitation. Take an AI like ChatGPT and limit its access to only certain data sets, and the AI will not look at that as an invitation, but as an instruction.

Benefit or Risk?

"Blessed are the Luddites, for they shall inherit the Earth", or "Adapt to AI, or Die"? Revolutionary changes like these are rarely absolutes. AI can be a significant benefit to the running of a business, large or small. The Industrial Revolution did not result in mass unemployment - it enabled the transition from serfdom to economic mobility. But with every opportunity, there is risk, and with every risk comes opportunity.

A small business does not have to jump on the AI train, or be thrown under it. It is all very interesting, and a cool novelty to show off, but none of it is essential. And if some essential business function is taken over by AI, be very wary - when you don't exercise your business mind, AI will cause it to atrophy.